News Center

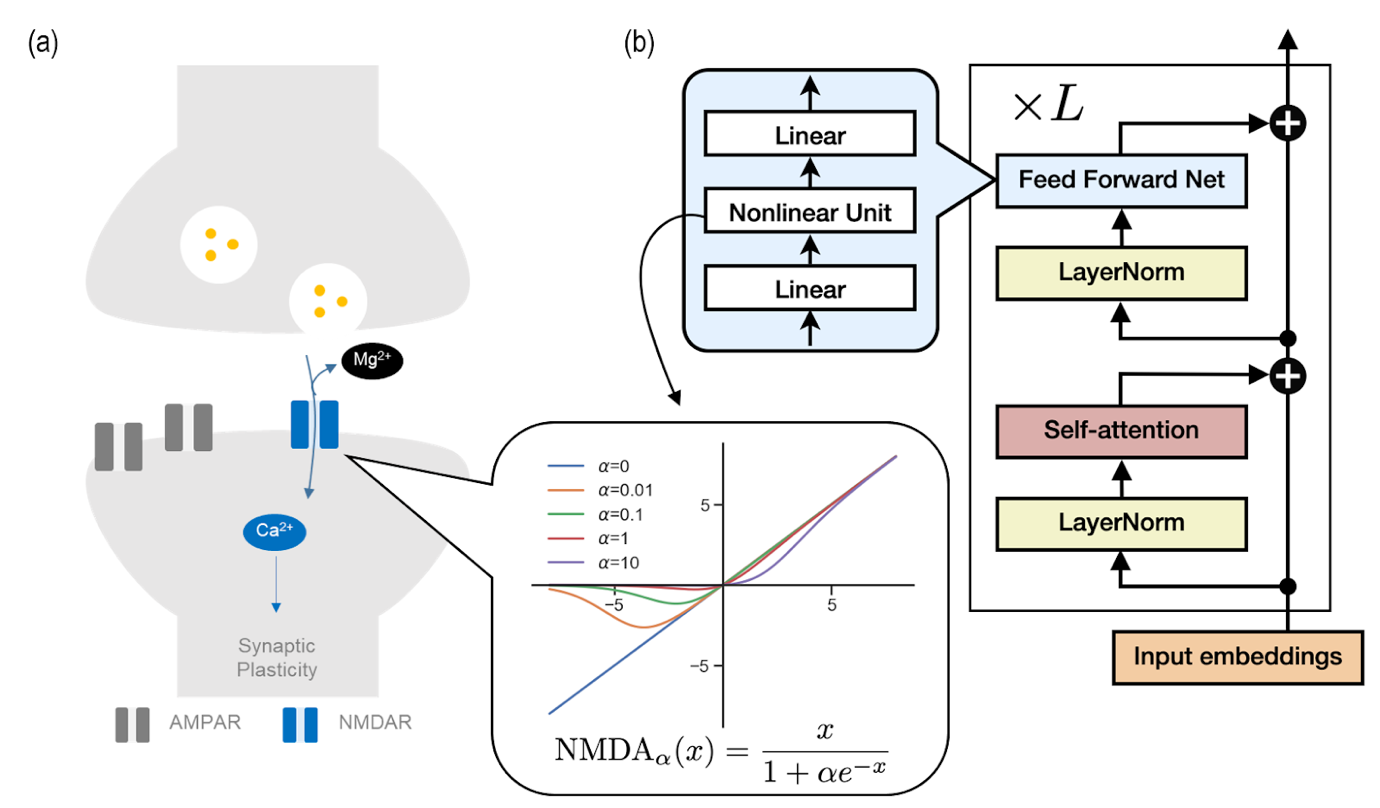

AI's memory-forming mechanism found to be strikingly similar to that of the brain

An interdisciplinary team of researchers from the Center for Cognition and Sociality and the Data Science Group within the Institute for Basic Science (IBS) has revealed a striking similarity between memory processing in artificial intelligence (AI) models and in the hippocampus of the human brain. The finding offers a new perspective on memory consolidation, the process that transforms short-term memories into long-term ones, in AI systems.

In the race towards developing Artificial General Intelligence (AGI), led by influential entities such as OpenAI and Google DeepMind, understanding and replicating human-like intelligence has become an important research interest. Central to these technological advancements is the Transformer model [Figure 1], whose fundamental principles are now being explored in new depth.

The key to powerful AI systems is grasping how they learn and remember information. The team applied principles of human brain learning, specifically memory consolidation through the NMDA receptor in the hippocampus, to AI models.

The NMDA receptor is like a smart door in your brain that facilitates learning and memory formation. When a brain chemical called glutamate is present, the nerve cell undergoes excitation. A magnesium ion, on the other hand, acts as a small gatekeeper blocking the door. Only when this ionic gatekeeper steps aside are substances allowed to flow into the cell. This is the process that allows the brain to create and keep memories, and the role of the gatekeeper (the magnesium ion) in the whole process is quite specific.

The team made a fascinating discovery: the Transformer model seems to use a gatekeeping process similar to the brain's NMDA receptor [see Figure 1].
This revelation led the researchers to investigate whether the Transformer's memory consolidation can be controlled by a mechanism similar to the NMDA receptor's gating process.

In the animal brain, a low magnesium level is known to weaken memory function. The researchers found that long-term memory in the Transformer can be improved by mimicking the NMDA receptor. Just as changing magnesium levels affect memory strength in the brain, tweaking the Transformer's parameters to reflect the gating action of the NMDA receptor led to enhanced memory in the AI model. This finding suggests that how AI models learn can be explained with established knowledge in neuroscience.

C. Justin LEE, a neuroscientist and director at the institute, said, “This research makes a crucial step in advancing AI and neuroscience. It allows us to delve deeper into the brain's operating principles and develop more advanced AI systems based on these insights.”

CHA Meeyoung, a data scientist on the team and at KAIST, noted, “The human brain is remarkable in how it operates with minimal energy, unlike the large AI models that need immense resources. Our work opens up new possibilities for low-cost, high-performance AI systems that learn and remember information like humans.”

What sets this study apart is its initiative to incorporate brain-inspired nonlinearity into an AI construct, marking a significant advance in simulating human-like memory consolidation. The convergence of human cognitive mechanisms and AI design not only holds promise for creating low-cost, high-performance AI systems but also provides valuable insights into the workings of the brain through AI models.
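To make the gatekeeper analogy concrete, the sketch below shows one way a magnesium-like gate could be written as an activation function. It is an illustration only: the functional form `x * sigmoid(beta * x - ln(alpha))`, the function name `gated_activation`, and the parameters `alpha` and `beta` are assumptions introduced here and are not given in this article. In this sketch `alpha` plays the role of the gatekeeper: a larger value keeps the gate closed until the input is stronger.

```python
import math


def _sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def gated_activation(x: float, alpha: float = 1.0, beta: float = 1.0) -> float:
    """Hypothetical gated nonlinearity: x * sigmoid(beta * x - ln(alpha)).

    alpha (> 0) is an assumed "magnesium-like" blocking strength and
    beta is an assumed slope parameter; neither name comes from the article.
    """
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    gate = _sigmoid(beta * x - math.log(alpha))  # fraction of the "door" that is open
    return x * gate


if __name__ == "__main__":
    # Compare how much of the same input passes through for different gate strengths.
    for alpha in (0.1, 1.0, 10.0):
        outputs = [round(gated_activation(x, alpha=alpha), 4) for x in (-2.0, 0.5, 1.0, 4.0)]
        print(f"alpha={alpha}: {outputs}")
```

With `alpha = 1` and `beta = 1` this sketch reduces to the standard SiLU (Swish) activation, `x * sigmoid(x)`. Raising `alpha` suppresses weak inputs while leaving strong inputs almost unchanged, which is the qualitative behaviour the gatekeeper analogy describes.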



- Content Manager

- Public Relations Team : Yim Ji Yeob 042-878-8173

- Last Update 2023-11-28 14:20